Data cleaning in python using pandas

Data cleaning is a very important part of any data science project as data scientist spend 80% of their time is this step of the project. But not very much attentions is given to the cleaning process and not much research efforts are put to create any sort of framework recently I came across an amazing paper titled as Tidy data by Hadley Wickham in Journal of Statistical Software in which he talks about common problems one might encounter in data cleaning and what a Tidy data looks like I couldn’t agree more to him, he has also created a R package reshape and reshape2 for data cleaning, but the problem was the paper had very little to no code I also found the code version of the paper but it was in R, while most of my data cleaning work is done in pandas, I had to translate all those R solutions to pandas equivalent, so in this post the I will summarize all the main idea of the paper that the author suggests in the paper and also how we can solve it in pandas.

#Basic terminology

It’s good to define terms that bring reads and writer on the same mindset. A data-set is a collection of values, usually either numbers (if quantitative) or strings (if qualitative). Values are organised in two ways.

- A Variable contains all values that measure the same underlying attribute (like height, temperature, duration) across units.

- A Observation contains all values measured on the same unit (like a person, or a day, or a race) across attributes.

Every value belongs to a variable and a observation. To make the terms more clear let consider an example of a user information example below:

| Name | Age | Country | Gender |

|---|---|---|---|

| Gustavo | 24 | Colombia | Male |

| Hussain | 34 | India | Male |

| Messy | 35 | Argentina | Male |

| Teresa | 45 | India | Female |

The above data-set have 16 values representing 4 variables and 4 observation. Which can be broken down as follow:

- There are 4 variable (name, age, country and gender)

- Gender variable has two possible values(M/F) these kinds of variable are generally call categorical variables

- Country variable as 3 distinct values.

Its really important to understand the terms observation and variable, as they will be extensively used in upcoming sections.

#What is tidy data?

Once you have understood the concept of observation and variable we are ready to define what is tidy data. In tidy data:

- Each variable forms a column.

- Each observation forms a row.

- Each type of observational unit forms a table.

This is Codd’s 3rd normal form (Codd 1990), Messy data is any other arrangement then this form. One might use various methods to extract variable but this might slow the analysis and invites error. Mapping rows, columns and tables to observation, variables and types will provide a good conceptual framework to map an entity to a functional form, for example, if you are are building a model to predict housing price based on features like location, area, number of rooms, etc. Price variable becomes a function of other variables of the table.

The author suggested that there are mainly five problems commonly observed in messy data which are as follows :

- Column headers are values, not variable names

- Multiple variables are stored in one column

- Variables are stored in both rows and columns

- Multiple types of observational units are stored in the same table

- A single observational unit is stored in multiple tables

for the rest of the post we will discuss what these problems look like and how to fix them.

#Five most common problems with messy data-sets:

#Column headers are values, not variable names

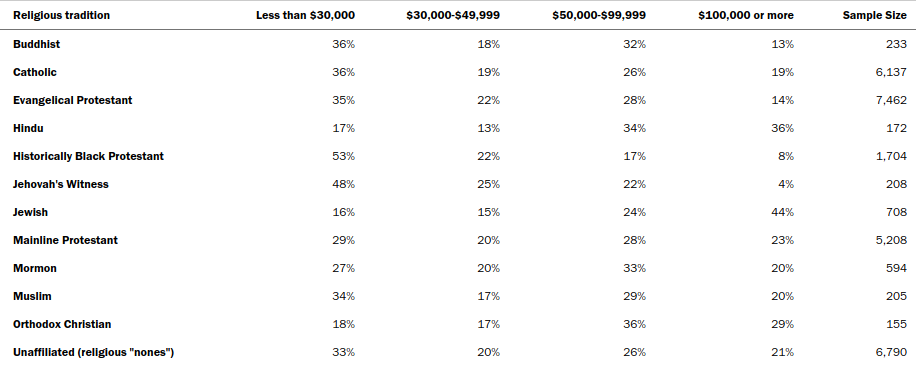

To better illustrate this problem lets consider are pewforum.org Income data of various religious group in the US. First 12 rows of the data is shown below

As you can see there are 6 columns which are religion, 4 income groups and sample size. You can see the problem here income variable is stored in the column header, just 3 variables religion, income and sample size would have been sufficient, the income variable would have 4 possible values ( <30k / >30k and <50k / >50k and <100k / >100k).

There are two main arguments as to why this form of representation is called messy :

1. Data in this format are usually designed for presentation not ideal for analysis.

2. Variables are presents in both columns and rows.

To solve this problem these issue there is a melt function in pandas which can be accessed as pandas.melt to perform this operation we need two parameters id variable(id_vars) and value variables(val_vars), id variable will we the variable to which the columns headers will be mapped, in this example we will choose Religious tradition as the id variable to 4 different values of income groups as value variables. You can see the problem of repeated values in Religious tradition variable which was mentioned before.

1 | # religious_df is the dataframe which stores above table |

There are good sides to this kind of data which are:

1. Values are sorted efficiently as there are no repeated values as you can see the tidy form of data below has repeated values, this problem can be dealt with table normalization.

2. Matrix operation can be done efficiently for example if we had another data of income of religious groups of Europe and we wanted to combine the data of both countries in one dataframe then simple matrix addition operation followed by value normalization would have solved the matter.

#Multiple variables are stored in one column

This is usually due to the way data is represented, person’s full name is an example of this problem. Full name has 3 possible variable first name, middle name and surname, the first name indicating the person and middle name can point to another row in the table and surname can help you to find the group of people belonging to the same family for example in kaggle titanic data we can extract this column from full name. This procedure is similar to feature engineering sometimes, the gender variable must be missing but it can be extracted from the full name if the name has the title (Miss / Mr / Mrs / Ms).

Solution to this problem is to split the column having multiple variable into individual variable, in the full name example its simple as string split but in some case you may have to apply so logic. pandas has apply function which takes a function as a parameter, this function has the algorithm that runs on data to get derived value, apply function iterate thought each row and run the function passed on each element of the column.

1 | In [1]: titanic_df = pd.read_csv('../input/train.csv') |

#Variables are stored in both rows and columns

Variable stored in the column is the problem we have solved be before then why does is need another section to discuss it again. This is because if the variable is in the row then there will be a column which will hold the value of that variable, and that column will be sort of variable itself. Let take an example of Global Historical Climatology Network for one weather station (MX17004) in Mexico for five months in 2010.

Solution to this problem is to file melt the columns on the value columns using pandas melt function and then pivot the table on the row which has the variables element column for this example using pandas pivot_table method. Below is the code

1 | In[1]: station_df = pd.read_csv('station.csv') |

below are the first few rows of the cleaned data

| element | year | month | day | tmax | tmin |

|---|---|---|---|---|---|

| 0 | 2010 | 1 | 1 | 30.0 | 3.0 |

| 1 | 2010 | 2 | 1 | 45.0 | 34.2 |

| 2 | 2010 | 2 | 2 | 27.3 | 14.4 |

| 3 | 2010 | 2 | 3 | 24.1 | 14.4 |

| 4 | 2010 | 2 | 6 | 20.0 | 21.0 |

#Multiple types of observational units are stored in the same table

This kind of problem arises due to the fact that multiple types of observation units are stored in the same table, this leads to the problem of duplicate values in dataframe so much so that size of the dataframe increases considerably. This concept is similar to that of database normalization where each fact is expressed in one place and if that fact has to be used somewhere else then the reference ID of that table is used.

let take an example of the billboard weekly ranking of the songs dataset set, this dataset has two type of observation unit, the songs and the weekly ranking. This is the reason there is repetition in values of artist, tract and time variables. We need the break down this dataset into two dataframe songs and weekly rank

1 | In [1]: weekly_rank_df = pd.read_csv('songs.csv') |

#A single observational unit is stored in multiple tables

It’s not very unusual to find this kind of problem same observation unit is spread across the various database, file format or different file, usually they are separated by another variable like the year, person etc. For example, the baby dataset top 129 name is in a different file, each file for a year so essentially each file depicting the year variable. Combining the data is not much difficult if the data source but the variable names remain consistent, but it becomes challenging when the data structure changes over time. Nonetheless, pandas is the perfect tool to deal this kind of problem for the following reason:

- It has methods to import data from a various source. You can find pandas.read*_ functions that can import data from JSON, csv, database and various other file formats.

- once we have imported the data in the dataframe pandas has various indexing mechanics like multilevel, hierarchal indexing to pick the observations of interest based on some value of the indexed variable.

- pandas also have methods to merge different dataframe based on their indexing, as we discussed in the previous point indexing can not necessarily be a number but can be any shared variables of choice.

#Conclusion

We saw what a messy data is and defined what a tidy data should look like. We also discussed 5 most common problems of messy data and for each problem we discussed the messy table structure and who how we can fix it using few lines of pandas library.