Implementing K-NearestNeighbour algorithm from scratch in python

K-Nearest Neighbour is the simplest of machine learning algorithms which can be very effective in some cases. The objective of the post it to implement it from scratch in python, you need to know a fair bit of python for and a little bit of numpy for the faster version of the algorithm. Once we have implemented the algorithm we will also see how to improve the performance of the algorithm. As there is no single invincible algorithm, we will look into advantage/disadvantage of the algorithm, this will help us to decide on when to use the algorithm. Alright, then let’s get straight into it.

#What is K-Nearest Neighbour Algorithm (KNN)?

KNN is a supervised learning algorithm it means we need some label training data to use the algorithm. KNN is also called non-parametric algorithm as it makes no explicit assumption about the form of data, unlike any other parametric machine learning algorithm it does not have to estimate any parameter like the linear regression for it to work. It is also a lazy algorithm as the algorithm doesn’t run until you have to make the prediction.

In a typical machine learning algorithm, we are trying to predict the certain outcome base on data points (ML folks call it features). For example, if we are trying to predict the type of Iris flower based on petal height, width and sepal height, width. So we have 4 feature here and we are trying to classify the type of iris flower(assuming its a classification problem). If we plot feature on the graph and when we have to make the prediction for a data point, we try to find the nearest K data point and take the average value of the outcomes of those nearest data points.We can use KNN for both regression and classification problem. They both use the nearest data point in a different way to so their respective problem. If it’s the classification problem then we take the class that occurs the most in the K data points. And if it’s the regression problem then we take the average of the values of the K data points. There are also other variants of the KNN which is called weighted KNN which we take weight average of the K data points for both classification and regression problem. K in KNN is the positive natural number i.e. K > 1, its the number of neighboring data points to consider when deciding the result. It’s a critical component of the algorithm which we will see how to choose.

When I say nearest data point, how do I calculate the distance between points, it’s an interesting question. Well, it’s very simple to calculate the distance between two points in one dimension like the distance between 5 and 12 by simple subtraction it’s 7 but in higher dimension we use Euclidean distance to measure the distance between two points, there are other approaches as well like man-hantten distance, cosine distance, and hamming distance. The goal here is to find similarity in data points, finding similarity in higher dimension is a challenge in itself as we progress with the post we will see why.

#Calculating distance between data points

We first need to calculate the distance between two data point for any given dimension, once we have that function we will use of calculating the distance between test data points to every other data point in the training set. As we are using Euclidean distance, it can be defined as the square root of the square of the difference between two points. Below is the mathematical formula for calculating the distance between two points.

$$dist = \sqrt{(x_1-y_1)^2 + (x_2-y_2)^2 + … + (x_n-y_n)^2}$$

$$dist = \sqrt{\sum_{i=1}^n (x_i-y_i)^2}$$

Python implementation for the above equation is as below

1 | def euclidean_distance(data_point1, data_point2): |

Now that we can calculate distance between two data point we are now interested in finding K nearest neighbouring data points, for that we first find the distance between one point and every other point and sort the distance in ascending order and take the first K points, those will be the data point we are interested to be used to calculate our next result.

1 | def get_neighbors(train_set_data_points, test_feature_data_point, k): |

let try to test the above function with some data points and see if it’s doing its job right.

1 | euclidean_distance([1,2],[2,3]) |

Since these are the common methods that can be used with both version of KNN classification and regression it would be nice to put in KnnBase class then this class can be inherited by KnnRegression class which implements KNN regression algorithm and KnnClassifier which implements KNN classification algorithm. API will be a sklearn style which has parameter initialization in class constructor and class has fit and predict method. If you are following along you can just copy-paste the code if you are feeling lazy to type it.

1 | import math |

#Implementing KNN classifier

Predicting the outcome of the KNN classification algorithm is different than that of regression. For classification problem, we take votes of K closest neighbor and the class that has highest votes wins.

1 | class KnnClassifier(KnnBase): |

#Implementing KNN Regression

For regression setting, we just take the average of the output of the K closet training data points.

$$output = {1 \over K}{\sum_{i=1}^n (y_i)}$$

1 | class KnnRegression(KnnBase): |

#Optimized the computation (KNN vectorized version)

Above implementation which we saw using python array is very memory hungry and very slow which will be obvious when you run this algorithm on large dataset. To mitigate that we can vectorize the array operations using numpy library which can do vector computation much faster.

1 | def get_neighbors_v(train_set, test_set, k): |

to compare the performance of both the function we will use ipython’s %timeit magic function and run the function for 10000 time and compare the results. So fire a ipython console and type the following code:

1 | In [1]: from sklearn import datasets |

as you can see the result are 40x faster. Crude implementation using python’s native array takes 674µs to execute while vectorized version takes 17.3µs to execute 10000 function calls. You can replace the get_neighbour function code with the vectorized version in the KnnBase class to take advantage of vectorized version of the code.

#Evaluate the performance of the KNN

Evaluation the performance of the model is a topic in itself and entire books are written on the topic so I will try to keep it simple. Algorithm evaluation is how good our model performers based on certain metrics which will discuss, evaluation techniques are different for classification and regression model.

For regression model there are various metrics that can be used, one such metrics is Root mean square error (RMSE) which is the measure of the standard deviation from the ground truth which is a scale dependent. If we want something scale independent then we can use Mean absolute percentage error(MAPE) it expresses the accuracy in percentage. A small RMSE/MAPE means a good model and large means, bad model. Below is the code for calculating for both RMSE and MAPE metrics.

1 | def get_rmse(y, y_pred): |

For KNN classification settings usually we use metrics like precision, recall, and f1 score but for sake of simplicity, we will measure the percentage of data that is correctly classified so if only 10% of the data is correctly classified we say that the model is 10% accuracy. A larger value is better. Below is the implementation.

1 | def get_accuracy(y, y_pred): |

#Data pre-processing

One problem one might run into using KNN is that the feature vector might be on different scale, for example, you have a features like height, weight, and daily expense, height is on inch scale whose value ranging from 2 to 100 , weight is on kg scale whose value might range in 10 to 200 and daily expense is on dollar that might range from 0 to million who knows.

A feature that has large scale can influence the decision of the algorithm even though the feature itself in is not actually contributing anything to the output. To tackle this problem we can bring each feature on same scale by either scale the feature in the 0 to 1 range using Normalization or use Standardization method that transforms the feature such that it has mean 0 and standard deviation 1 but to using standardization the assumption is that the feature follows normal distribution so use standardization only if the feature satisfies the assumption. For feature normalization, we will use sklearn MinMaxScale class.

#Choosing optimal K for KNN

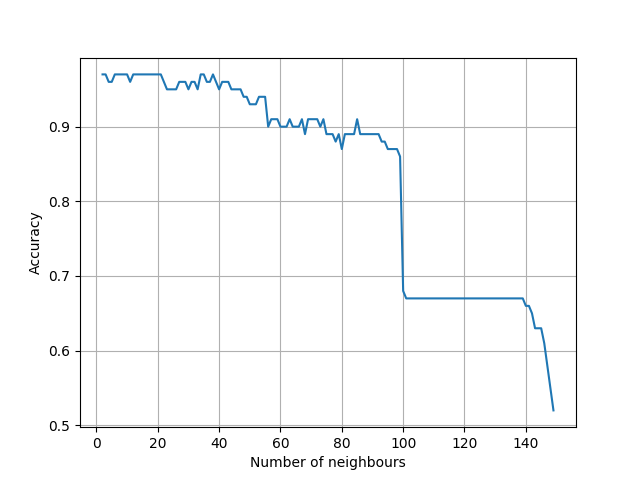

To get high performance for the model it is important to choosing the optimal value of K. So K is the tuning parameter for KNN algorithm. Since we know how to assess the performance from the above section we can plot the value of K vs the evaluation metrics and choose the K which gives maximum performance. Below is the code to do so.

For this example we will use iris data set which is a classification problem to try the concept :

1 | import numpy as np |

If you see the plot performance varies for different values of K, for K=2 we only consider nearest 2 data point and for K=len(iris.data)(all the data point) which is the average prediction for all data point performance varies and max value is 98% for K=13, performance decline as K increases and there is sharp decline after K=100 and 140 and its lowest for K=len(iris.data) which is 55%. We can use this same technique for regression class of problem by plotting.

#Bias vs Variance trade-off

As we saw the of the value of K has a drastic effect on the performance of the algorithm. For K=1 the decision boundary is highly flexible since the value of the nearest data point is directly applied so for this setting algorithm has low bias and high variance but as the value of K increases decision boundary becomes more and more inflexible i.e close to linear and this is close to high bias and low variance. Ideally, we want to have both bias and variance to be low but it can’t be so we are looking for a trade-off that in our iris data set was achieved for K=13 where we get optimal performance.

#Advantages

- If you are using linear regression or other statistical learning methods than those algorithms have some assumption on the data set like they should be normally distributed or error should be uncorrelated etc. but for KNN there is no such assumption made about the data.

- For KNN there are is only one parameter to tune i.e K, unlike regression where if you have N feature then there might be at least N parameters to tune.

#Disadvantages

-

Machine learning algorithms that work in low dimension might fail to produce the same result for high-dimensional setting especially similarity base algorithm like KNN. A good intuition behind this is very well explained by Pedro Domingos in one of his research paper which is as follows :

our intuitions, which come from a three-dimensional world, often do not apply in high-dimensional

ones. In high dimensions, most of the mass of a multivariate Gaussian distribution is not near the mean, but in an increasingly distant “shell” around it; and most of the volume of a high-dimensional orange is in the skin, not the pulp. If a constant number of examples is distributed uniformly in

a high-dimensional hypercube, beyond some dimensionality most examples are closer to a face of the hypercube than to their nearest neighbor. -

Another disadvantage of the algorithm is it only uses data points around the predicting points to make a decision and rest other data point are useless.

#Conclusion

We saw how we implemented the algorithm in python and also looked on how we could get maximum performance by choosing optimal value of K. We haven’t looked into everything related to KNN as there are family of algorithms that are based on KNN which fix the drawback we just discussed like weighted KNN which instead of just taking values on 0/1 from nearest neighbour it takes weighted average of the data points.